su_chef prototype 1: My robotic arm that detects apples and picks them up

building an image detection robot

In my previous post, I described my idea to start a worker-owned automation company by building a robotic food truck. I've started designing the interior of the truck and simulating it, but before I finish that, I wanted to get some experience working with a functional robotics tech stack.

When I consulted a friend who is getting a PhD in robotics, he suggested I get my feet wet by building a sous-chef. I thought that was perfect! But it's not sous (meaning "under" in French), it's su (meaning "substitute user" in Unix-speak).

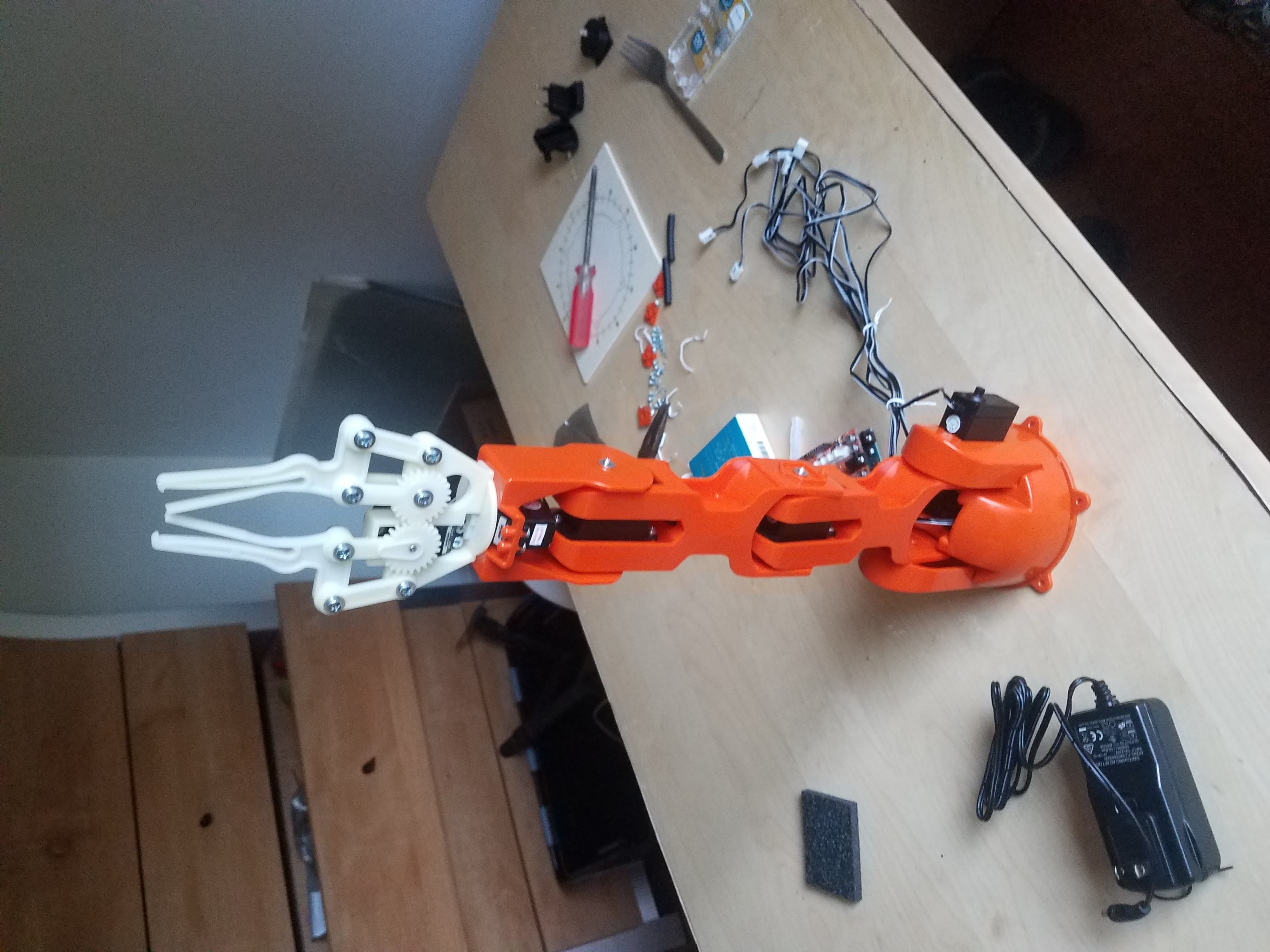

su_chef v0.1.1

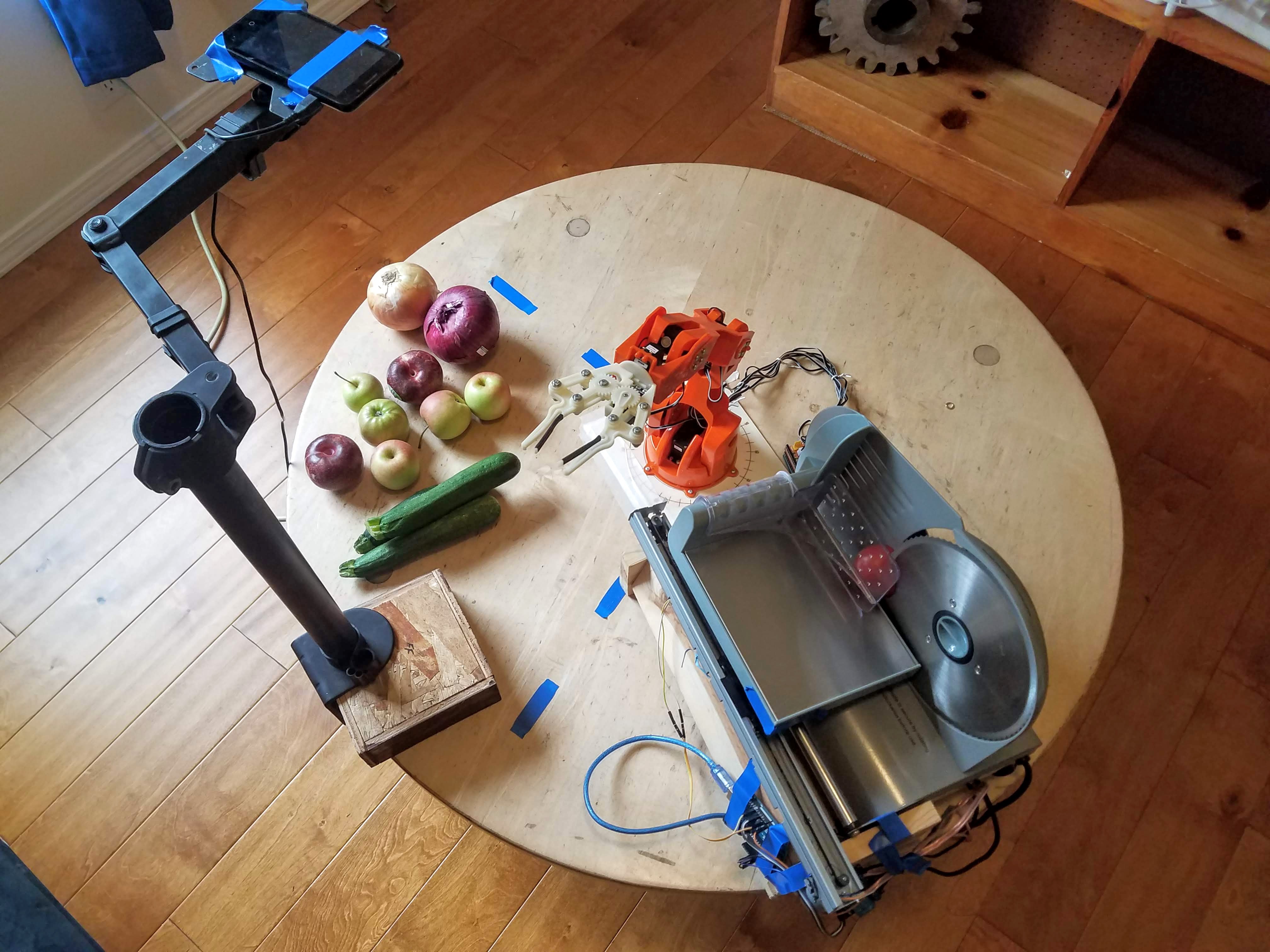

Thanks to the amazing open source ROS and Arduino communities, it took me a little over a week to go from just parts and an idea to this functioning prototype.

The Arduino Braccio arm works pretty well for the task and was fairly easy to set up. The ROS stack was more complex to set up, but it allowed me to leverage a number of existing projects and combine them. Finally, the reason that this project is (comparatively) cheap and easy to produce is that it operates on a single surface. All the objects for detection are on the same plane, so it becomes much easier to calibrate a mapping from a 2D image without the need for a fancy depth-aware camera.

In the rest of this post I'll break down how the project works at a high level. But if you want to build it yourself and see how to run it, you can go straight to the instructions in my source repository.

Project Build

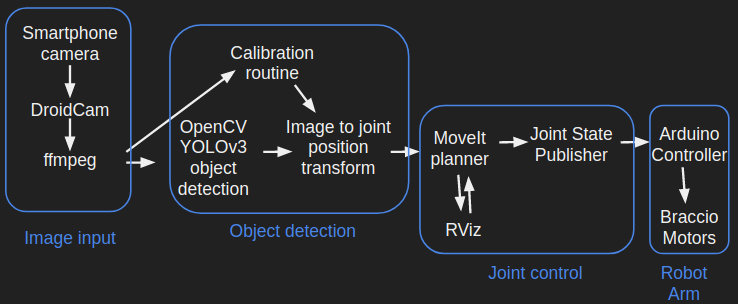

To connect the many parts of a production automation stack, robotics engineers will often use some kind of framework to coordinate tasks. For this project, I used an open source robotics operating system called ROS (Robot Operating System).

The amazing thing about how ROS works is that messages from many different subtasks are passed through a shared communication system. So essentially, I just grab USB camera images with one interface, pass them to a script for image detection, pass the output detections to a control script, and pass the control instructions to the Arduino. Importantly, all of those subtasks run independently with messages passing back and forth, and I don't have to deal with any of the communication handling. It's kind of magical.
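To make the message-passing idea concrete, here is a minimal sketch in plain Python. It is not the ROS API: `TopicBus`, the topic names, and the fake image dictionary are all hypothetical stand-ins, and unlike real ROS nodes everything here runs synchronously in one process. It only shows how each stage subscribes to one topic and publishes to the next without knowing who is listening.

```python
from collections import defaultdict


class TopicBus:
    """Tiny in-process stand-in for a topic system (hypothetical, not ROS)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every callback registered on this topic.
        for callback in self._subscribers[topic]:
            callback(message)


bus = TopicBus()
arm_commands = []  # stands in for the serial link to the Arduino


def detect(image):
    # Placeholder detector: pretend the frame contains one apple.
    bus.publish("/detections", {"label": "apple", "pixel": image["apple_at"]})


def plan(detection):
    # Placeholder controller: turn a detection into a pick command.
    bus.publish("/arm_command", ("pick", detection["pixel"]))


bus.subscribe("/camera/image", detect)
bus.subscribe("/detections", plan)
bus.subscribe("/arm_command", arm_commands.append)

# One fake camera frame flows through the whole chain.
bus.publish("/camera/image", {"apple_at": (320, 240)})
print(arm_commands)
```

In a real ROS setup each of these functions would live in its own node, so a crash or slowdown in one stage would not block the others.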

Here is a diagram of the system architecture for this project.

In the following sections I'll go over some of the main components.

Braccio Arm build

For this project, I used a 5 degree-of-freedom (5 DOF) robotic arm called the Arduino Braccio. The arm is driven by an Arduino Uno which can be controlled from my laptop via a USB cable. The arm came with an end gripper that is capable of picking up objects of at least 1kg. It cost less than $250 and took about an hour to put together.

The Arduino library for Braccio comes with basic tests that run directly on the Arduino to ensure that motors work.

The Braccio library's demo program can drive the motors through preprogrammed positions, but the library doesn't provide an interface for controlling the Braccio from external inputs. For that I turned to existing ROS packages, which work by sending messages over the USB /dev/ttyACM* port.

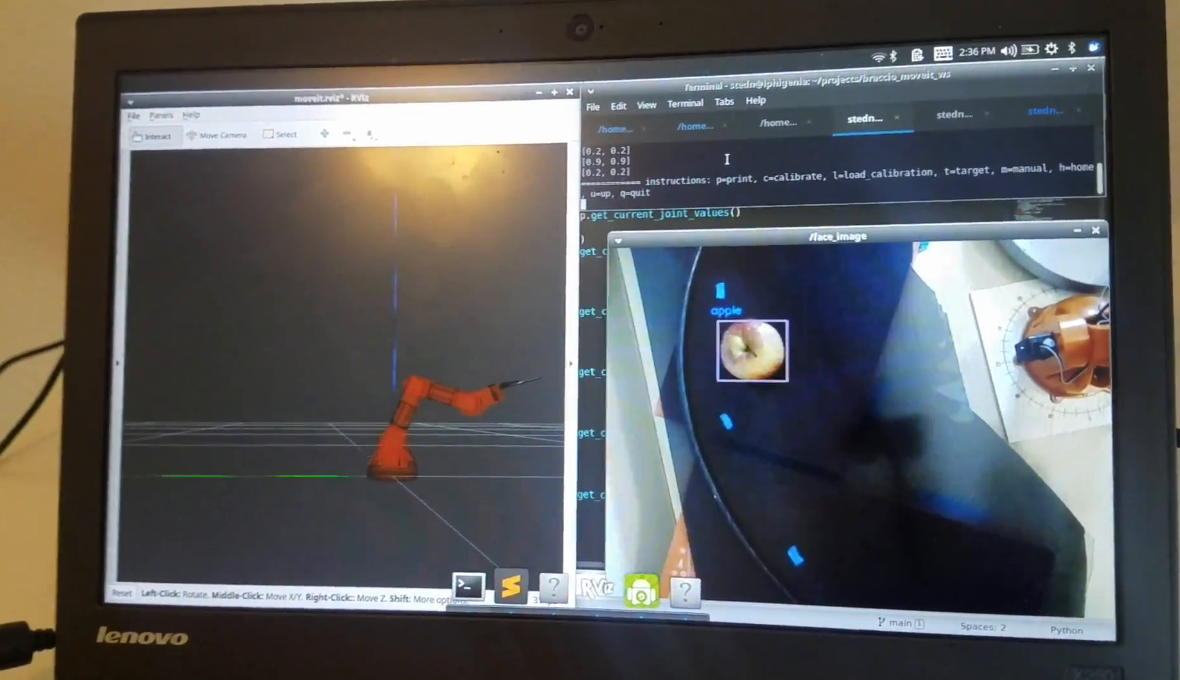

Braccio Arm control with ROS RViz and MoveIt

I found a ROS-based project that allowed direct joint position control for the Braccio, using RViz to visualize its URDF. However, this project didn't easily show how to do motion planning or programmatic control, so I combined it with another project that integrated with ROS MoveIt to allow connection through MoveIt's Python interface. This subcomponent can be tried out in simulation using just RViz and an included URDF file.

When connected to the real Braccio arm this allowed me to directly program a few basic motions to pick up items.

However, this method required manually specifying the arm position for each item to pick up, which isn't at all useful for grabbing and passing arbitrarily positioned items. For that I needed to add image detection.

OpenCV image detection integrated with ROS

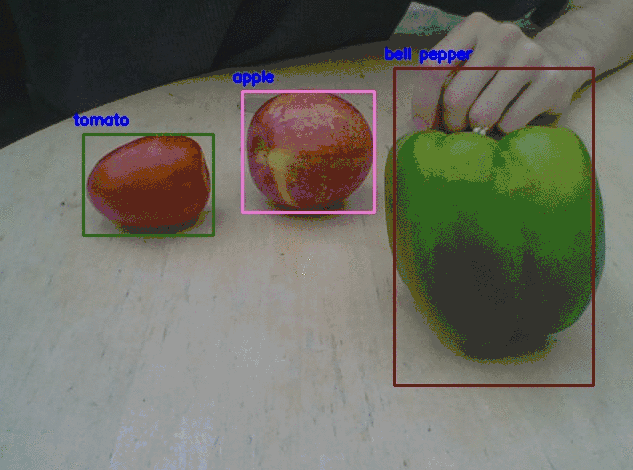

Again, I combined a few projects together here. I started with a project called Braccio CamAI that was set up to use webcam object detection to control the Braccio. Unfortunately, the code relied on a standalone Edge TPU to run the image detection algorithm, which was expensive and a bit overkill for the task in my opinion. I didn't want to buy one of those so I converted the modeling task over to OpenCV's YOLOv3 implementation based on this project.

The YOLO object detection algorithm applies a deep neural network to the image and identifies bounding boxes around any objects in the image that it is trained to recognize. The best thing about it is that it runs on the whole image just one time (hence the name You Only Look Once). This makes it fast enough that it can make repeated detections on streaming video even just on my wimpy CPU. For my purposes I started with a pre-trained model that had been trained with the capacity to identify apples amongst other things.
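As a rough illustration of what happens after the network runs, the sketch below turns raw YOLO-style output rows into pixel bounding boxes for a single class. It assumes the row layout commonly seen with OpenCV's YOLOv3 output, `[cx, cy, w, h, objectness, class scores...]`, with box values normalised to the frame size. The function name, the three-class toy data, and the `APPLE` index are hypothetical, and a real pipeline would usually also apply non-maximum suppression.

```python
def boxes_for_class(rows, class_id, frame_w, frame_h, min_conf=0.5):
    """Return (x, y, w, h, confidence) pixel boxes for one class."""
    boxes = []
    for row in rows:
        scores = row[5:]  # per-class scores follow the 5 box values
        best = max(range(len(scores)), key=lambda i: scores[i])
        conf = scores[best]
        if best != class_id or conf < min_conf:
            continue
        # Scale the normalised centre and size up to pixels.
        cx, cy = row[0] * frame_w, row[1] * frame_h
        w, h = row[2] * frame_w, row[3] * frame_h
        # Convert centre-based box to top-left corner form.
        boxes.append((round(cx - w / 2), round(cy - h / 2),
                      round(w), round(h), conf))
    return boxes


APPLE = 1  # hypothetical class index in a toy 3-class model
rows = [
    [0.5, 0.5, 0.25, 0.5, 0.9, 0.05, 0.9, 0.05],   # confident apple
    [0.1, 0.1, 0.1, 0.1, 0.8, 0.7, 0.1, 0.2],      # some other class
    [0.3, 0.3, 0.1, 0.1, 0.4, 0.1, 0.3, 0.1],      # apple, but too weak
]
apple_boxes = boxes_for_class(rows, APPLE, frame_w=640, frame_h=480)
print(apple_boxes)
```

The centre of each surviving box is the natural pixel location to hand to the calibration step.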

Calibration and Transformation

The final and most difficult piece was the connection between image detection and Braccio control. This requires two transformations: one from the object's location in the image frame into a frame relative to the arm, and one from the desired grasping location into joint angles for the motors.

To compute the mapping from image to real space, I designed a calibration protocol that moved the arm to various locations and then required me to click the corresponding location in the image. I recorded the locations of these points in image space and then used OpenCV's cv2.findHomography and cv2.perspectiveTransform functions. You can find more information on using those for Python here and here.
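For readers curious about what a homography does under the hood, here is a dependency-free sketch of the same idea. It is not the code from the project: instead of calling cv2.findHomography, which can fit many clicked points at once, it solves for the 3x3 matrix from exactly four point pairs with a plain linear solve. The function names and the calibration coordinates (pixels and centimetres) are made up for illustration.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate calibration points")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_homography(image_pts, table_pts):
    """Return the 3x3 homography mapping 4 image points to 4 table points."""
    a, b = [], []
    for (x, y), (u, v) in zip(image_pts, table_pts):
        # Two equations per point pair, with the bottom-right entry fixed to 1.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map one (x, y) pixel to table coordinates."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical calibration: clicked pixels and where the arm was (cm).
image_pts = [(100, 100), (500, 120), (520, 400), (80, 380)]
table_pts = [(0, 0), (40, 0), (40, 30), (0, 30)]
H = fit_homography(image_pts, table_pts)
print(apply_homography(H, (300, 250)))
```

With more than four calibration clicks, a least-squares fit such as the one cv2.findHomography performs averages out clicking error, which is one reason to prefer the library call in practice.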

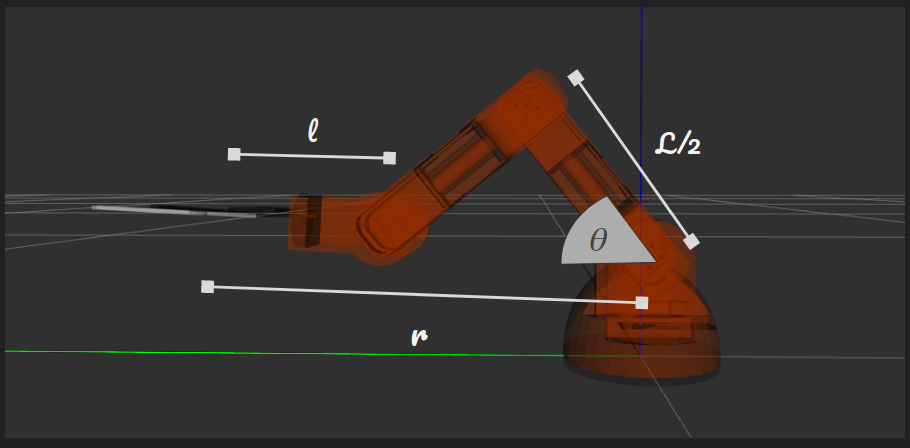

Finally, to transform from position to joint angles, I converted to polar coordinates with the arm base as the origin. I also manually computed the shoulder, elbow, and wrist angles required to position the gripper at a given distance from the base, based on the geometry of the arm itself and its joint angle limits.

Unfortunately, the Braccio has a limited annulus of positions from which it can lift objects off the 2D plane. To address this, I added a small tweak that caused it to "push back" an object if it is too close to pick up.
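The polar conversion and the "push back" decision can be sketched as follows. The reach limits, the function name, and the "out_of_reach" case are assumptions for illustration only, not values or behaviour taken from the project. The shoulder, elbow, and wrist angles depend on the arm's link lengths and are not covered here.

```python
import math

# Hypothetical reach limits in centimetres; measure these on the real arm.
MIN_REACH_CM = 12.0
MAX_REACH_CM = 30.0


def plan_grasp(x_cm, y_cm):
    """Return (action, base_angle_deg, radius_cm) for a point on the table.

    Coordinates are relative to the arm base, which sits at the origin.
    """
    radius = math.hypot(x_cm, y_cm)                     # distance from the base
    base_angle = math.degrees(math.atan2(y_cm, x_cm))   # base rotation
    if radius > MAX_REACH_CM:
        action = "out_of_reach"   # assumed handling, not from the post
    elif radius < MIN_REACH_CM:
        action = "push_back"      # too close to lift, nudge it outward first
    else:
        action = "pick"
    return action, base_angle, radius


for target in [(0.0, 20.0), (3.0, 4.0), (40.0, 0.0)]:
    print(target, plan_grasp(*target))
```

Keeping the decision separate from the joint-angle math makes it easy to retune the limits after measuring where the gripper can actually lift from.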

With all these parts combined, the arm is able to identify and pick up apples. At this point, I still use the interface to manually control it, but it would be possible to layer on more logic to determine when and where the apple should be picked up and delivered.

Next Steps

This is really just the start of the su_chef project. In another post I'll be elaborating on the whole design of the project, but here is a rough outline of the other things that need to be built.

Identifying more objects

The first improvement will be adding more objects to identify for pickup. Currently I've only implemented pickup of apples, but there are a few more food items I'd like to be able to find. Unfortunately, this will probably involve training my own YOLO model on a specialized dataset.

Building a veggie slicer

This won't involve robotic arm control per se, but it is still very important. I essentially want to build something like a food slicer that can have veggies dropped from the top and output into a bowl below.

Moving bowls

After chopping, the ingredients should drop into bowls below. Only one set of ingredients should be chopped at a time so the bowls will need to be repositioned on the opposite side of the robot.

Spooning

Probably the hardest mechanical challenge will be spooning the chopped veggies from bowls onto the plate. I suspect that I'll need to design a special spoon that can be reliably lifted by the arm.

Smarter controls

After the mechanical components are taken care of, I'll need to wrap the logic behind the whole device together. This will entail some kind of intelligent detection for when ingredient bowls are empty, and identifying which item to pick up and chop.

will stedden
