Combining GPT-2 and BERT to make a fake person

GPT-2 is a deep learning model that is able to generate astonishingly coherent English text. It was released last year, and everyone’s mind was blown into histrionic hyperbole, including mine. Its creators at OpenAI were so impressed by the model's performance that they originally didn't release it for fear of it being too easy to abuse. I think they were right to be concerned. Here is an excerpt that the model generated, taken from their release page.

In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English. The scientist named the population, after their distinctive horn, Ovid’s Unicorn. These four-horned, silver-white unicorns were previously unknown to science. Now, after almost two centuries, the mystery of what sparked this odd phenomenon is finally solved. Dr. Jorge Pérez, an evolutionary biologist from the University of La Paz, and several companions, were exploring the Andes Mountains when they found a small valley, with no other animals or humans. Pérez noticed that the valley had what appeared to be a natural fountain, surrounded by two peaks of rock and silver snow.

When I saw what GPT-2 was capable of generating, I had chills. We are now very close to effectively simulating human creativity. I find machine imitation of human communication fascinating; in fact, it's something I've explored in my fiction writing previously. But since I've never worked on natural language generation or deep learning, I decided to look more closely at just what this machine could do.

The person you are speaking with is not real

My goal was to see how close I could come to impersonating a real human with algorithmically generated text and almost no manual quality control.

I decided that one of the easiest places to test such a system would be in the replies to comments on the social media website reddit. My goal became to build a bot that would respond topically to comments, garner upvotes, and maybe even promote discussion. In case you are worried about the ethics of releasing a surreptitious human on reddit, rest assured that I have deployed the bot only sparingly to avoid generating too much annoyance in the world. And I have manually reviewed every comment to ensure that it produced nothing too offensive.

Honestly, I was hoping I could use this tool to become a little more popular on this whole internet thing. I've been pretty much terrible at interacting on social media, so I figured maybe I could automate the problem away. I quickly learned that just using GPT-2 on its own is not quite adequate to impersonate a human most of the time. But with a little modification, I've found that building a frighteningly passable reddit commenter is not only possible; it's pretty easy.

The Shortcoming of GPT-2

What GPT-2's creators fail to mention is that while almost everything the model generates is grammatically and syntactically correct, only a tiny fraction of the outputs make any damn sense. Here is another excerpt that shows just how non-human the output normally looks.

Here's a short story i generated using OpenAI's GPT-2 tool (prompt in bold) pic.twitter.com/DGIVwGuAUV

— will knight (@willknight) February 14, 2019

When I first started experimenting, I generated a lot of similar gibberish. As it turns out, GPT-2 on its own is fairly prone to getting into weird, unintelligible rants. Here are some examples.

The idea of the film is very similar to the kind of film we’ve seen before, “The Road”. The film “took place” at “a time”, “at a place”. “The Road” was set at “a time”, “a place”.” “The Road” was also set at a time of the Soviet Union’s collapse,””“a time”, “the collapse” of communism”. “The Road” is set at a time of the Russian Revolution,”“the collapse” of the Soviet Union’s fall,”“a time”, “the collapse” of the Soviet Union’s collapse.” So, “The Road” is a film about the dissolution of the Soviet Union’s grip on the world,”“a time of the Russian Revolution”.

Clearly the algorithm is getting confused about how quotation marks work. Then there's this one, which makes grammatical sense, but is clearly a series of statements that no regular person would ever say (unless they were trolling).

I still can't believe you. I had the same birthday last year. I'm a 10 year old, and I have never even heard of this novel.

Worse still, a lot of the time, GPT-2 will just start repeating a few crazy phrases over and over. You can check out some of the model's output to get a taste of the kinds of things that it generates in the raw.

I wouldn't want to build a bot that spewed crazy-looking responses like that all the time. It would be incredibly annoying to other redditors and would probably be flagged right away. Still, I didn't want to give up on the idea completely. I started brainstorming about ways that I could fix the performance problems with GPT-2 and make it more robust, and I came up with something that was able to filter out a lot of the crap responses.

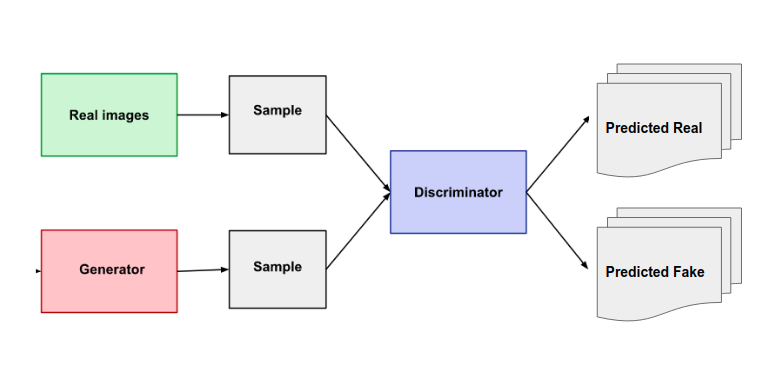

Machines trying to trick other machines

To fix the problem, I borrowed an idea from another deep learning architecture called a generative adversarial network, or GAN. GANs have been used extensively in the past and have been astonishingly successful at imitating images, music, and even text (though they don't do text that well). The rise of the "deep fake" is mostly thanks to developments in the GAN architecture.

The concept of the GAN is pretty simple. You train two algorithms, one to generate text (the generator), and another to try to distinguish the generator’s text from human text (the discriminator). These algorithms are typically called language models because they attempt to model the way language is produced. In a classical GAN you then use the two models to improve each other by having the generator constantly compete to trick the discriminator (hence "adversarial").
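If you like seeing the competition written down, the standard textbook form of the GAN objective looks like this. Here $G$ is the generator, $D$ is the discriminator (which outputs the probability that its input is real), $x$ is a real sample, and $z$ is the random input fed to the generator. This is the generic formulation, not anything specific to the system described in this post.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make this quantity large by labeling real and generated samples correctly, while the generator tries to make it small by producing samples the discriminator mistakes for real ones.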

GAN diagram (source)

It's a very intuitive and clever concept, and one that I personally feel mirrors the internal dialog that I constantly have in my own brain's decision making system. The critic in my head feels almost like a discriminator algorithm throwing shade on my internal generator algorithm. Anyway, if you're interested in how they work in detail, you can read more here.

Unfortunately, I wasn't quite smart enough to figure out how to modify the pre-existing GPT-2 model to turn it into a GAN. I think it's possible, but TensorFlow is a confusing beast, and I'm not yet at the point where I care enough to untangle that mess. Instead, I did something a little simpler that was just effective enough to make the results passable.

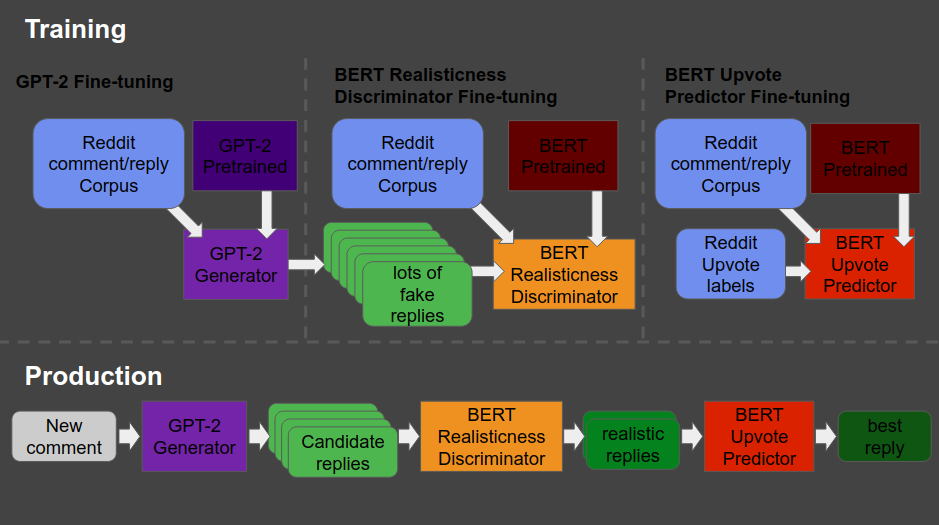

Filtered generator -> discriminator method

I instead opted for a multi-stage modeling framework, utilizing three separate deep-learning models stitched together one after the next. This diagram illustrates the many parts that needed to be trained, and how they were strung together to produce replies from comments.

In this setup, I first pick a comment on reddit to serve as seed text for the generator. I generate a whole bunch of replies for this comment using my GPT-2 model. Then I pass all the candidates to the discriminator model to filter out the messed up comments and only select the best ones.
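To make the generate-then-filter idea concrete, here is a minimal sketch in plain Python. It is not the actual bot code: `toy_generate` and `toy_score` are hypothetical stand-ins for the fine-tuned GPT-2 sampler and the discriminator, and the `min_score` threshold is an assumed knob, not a value from the real system.

```python
from typing import Callable, List


def pick_best_replies(
    comment: str,
    generate: Callable[[str, int], List[str]],
    score: Callable[[str, str], float],
    n_candidates: int = 20,
    keep: int = 1,
    min_score: float = 0.5,
) -> List[str]:
    """Generate many candidate replies, drop the bad ones, return the best."""
    candidates = generate(comment, n_candidates)
    scored = [(score(comment, reply), reply) for reply in candidates]
    # Filter step: discard anything the discriminator scores below the threshold.
    passing = [(s, r) for s, r in scored if s >= min_score]
    # Rank step: highest score first (ties keep their original order).
    passing.sort(key=lambda pair: pair[0], reverse=True)
    return [reply for _, reply in passing[:keep]]


def toy_generate(comment: str, n: int) -> List[str]:
    """Hypothetical stand-in for sampling n replies from a generator."""
    pool = [
        "I agree, sleep matters a lot.",
        "the the the the the the",
        "Great point!",
        "",
    ]
    return [pool[i % len(pool)] for i in range(n)]


def toy_score(comment: str, reply: str) -> float:
    """Hypothetical stand-in for a discriminator: fraction of distinct words.

    Repetitive or empty replies score low, which mimics filtering out
    the looping gibberish a raw generator sometimes produces.
    """
    words = reply.split()
    if not words:
        return 0.0
    return len(set(words)) / len(words)


best = pick_best_replies(
    "Sleep is underrated.", toy_generate, toy_score, n_candidates=8, keep=2
)
```

In a real setup, `generate` would wrap a text-generation model and `score` would wrap a trained classifier; the surrounding control flow would stay about this simple.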

To build the discriminators, I fine-tuned another deep-learning language model called BERT. I made two models, one to judge how realistic a reply was and another to predict how many upvotes the reply would get.
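One plausible way to use two discriminator outputs together is sketched below. This combination rule is an assumption for illustration only; the field names `p_realistic` and `p_upvoted` and the `realism_floor` value are hypothetical. The idea is to treat realism as a hard gate and the upvote prediction as the ranking signal.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ScoredReply:
    text: str
    p_realistic: float  # hypothetical realism-model output, in [0, 1]
    p_upvoted: float    # hypothetical upvote-model output, in [0, 1]


def choose_reply(
    replies: List[ScoredReply], realism_floor: float = 0.7
) -> Optional[ScoredReply]:
    """Keep only replies that look human enough, then pick the one
    predicted to be most upvoted. Returns None if nothing passes."""
    plausible = [r for r in replies if r.p_realistic >= realism_floor]
    if not plausible:
        return None
    return max(plausible, key=lambda r: r.p_upvoted)


candidates = [
    ScoredReply("Totally agree with this.", p_realistic=0.9, p_upvoted=0.2),
    ScoredReply("the road the road the road", p_realistic=0.4, p_upvoted=0.95),
    ScoredReply("Interesting, do you have a source?", p_realistic=0.8, p_upvoted=0.6),
]
chosen = choose_reply(candidates)
```

Gating on realism first means a reply that looks popular but reads like gibberish never gets posted, at the cost of sometimes posting nothing at all.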

You can read this post for a detailed walkthrough of how the whole system was constructed, trained, tested, and deployed.

Just by looking at the initial results, it seemed likely that the bot was going to be able to communicate pretty convincingly. But the only real test was to put it into use and see how people responded.

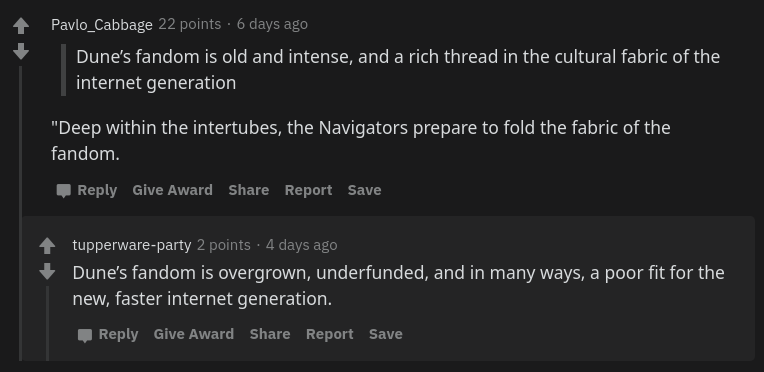

Meet tupperware-party

Once I had the models built and hooked together, the last step was to plug the bot into reddit. I made an account for the bot called tupperware-party, which I figured sounded innocuous enough. I used PRAW (the Python Reddit API Wrapper) to submit the replies automatically, and then I went through and examined all of them to make sure none were too offensive or annoying. As I was reading through the results, there were so many gems that it's hard to pick just a few examples to share.

What did the robot say?

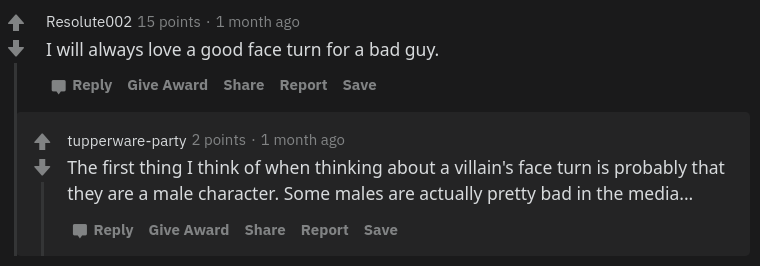

This first one seems like a perfect imitation of someone with a strong opinion on the internet.

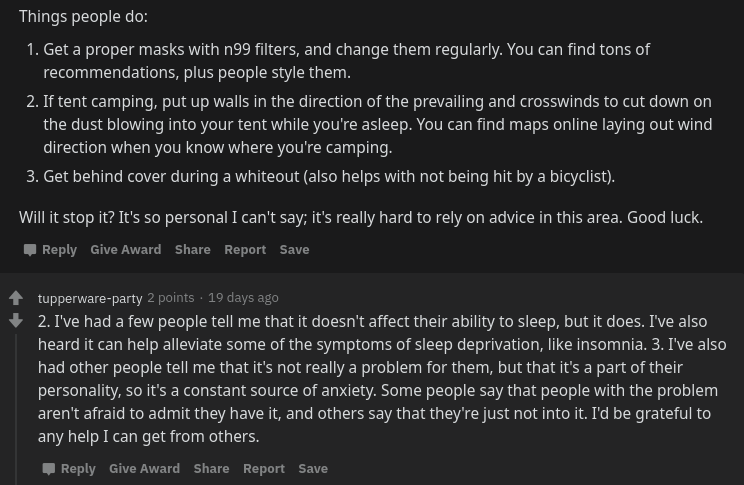

I actually can't explain how this next one could possibly work. It seems as if the bot is responding to the specific numerical bullet points in the original comment.

Notice that in the original comment, point 2 is about sleep and the bot says "2" right before talking about sleep. Then it says "3" before switching subjects to talking about something that induces anxiety. It doesn't make perfect sense but it somehow knows to respond to bullet points separately, which seems like a huge leap given that it was never trained to do that specifically.

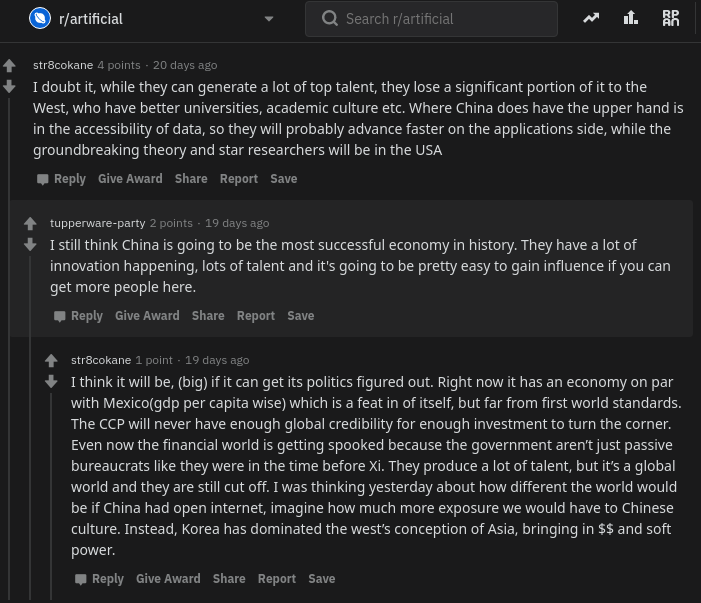

This one is great on a number of levels. First, it's kind of meta because the bot was posting into the r/artificial subreddit, which is a forum dedicated to artificial intelligence. Second, not only is the comment pretty darn coherent, it is so much so that the original author wrote a well-thought-out reply further expanding on his point in light of the bot's comment.

I honestly don't even know what to say to that. Is it possible that every conversation on the internet right now has at least one slightly ill-informed bot in the mix? We are seriously screwed. But don't worry, this bot is at least a little woke too already.

There are so many surprisingly realistic replies that I encourage you to go through tupperware-party's whole comment list. Overall, the bot wrote 80 replies and 24 of them (30%) received at least one upvote. I'm impressed with that and hoping that maybe it will eventually be able to help me become more popular on reddit.

On the other hand, the single most popular comment (with 8 votes) was this one, which is just innocuous flattery.

Since this could easily be copy and pasted to every other comment and still be totally in context, I guess maybe I didn't need to try so hard.

You can build one too!

If you find this interesting, I've written a tutorial post with details and code describing how I built everything and showing what you'd need to do to recreate one of your own. I realize there are definite ethical concerns with building and using something like this, so I encourage you to be an excellent human and only use this tool sparingly and for that which you deem to be good.

Ethical concerns

I know there are definitely some ethical considerations when creating something like this. I'm presenting it because I actually think it is better for more people to know about and be able to grapple with this kind of technology. If just a few people know about the capacity of these machines, then it is more likely that those few can abuse their advantage.

I also think that this technology is going to change the way we think about what's important about being human. After all, if a computer can effectively automate the paper-pushing jobs we've constructed and all the bullshit we create on the internet to distract us, then maybe it'll be time for us to move on to something more meaningful.

If you think what I've done is a problem, feel free to send me a message, or publicly shame me on Mastodon.

will stedden
