The Barbie Hadoop Cluster - Stage 1

Ever since I took Coursera's Intro to Data Science course, I've been dreaming of getting my own MapReduce system up and running. It just seems so much more rewarding to have my own data framework right in front of me rather than paying for Amazon AWS or Google Cloud.

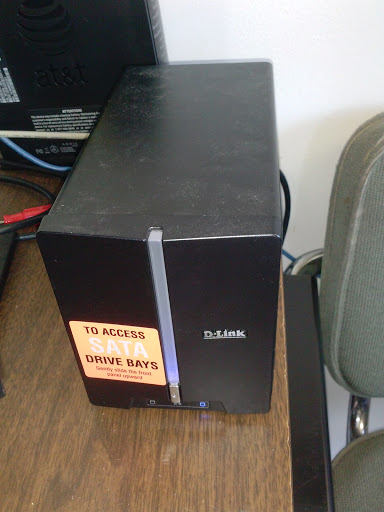

Recently the non-profit I volunteer for handed me 9 left-over recycled computers and a lot of left-over hard drives that they needed to find a home for. So while they were sitting in my house, I decided to put them to use in my dream Hadoop Cluster.

So here is my step-by-step protocol for setting up the cluster from scratch. In this post I'm going from Ubuntu install CD to Hadoop "pseudo-distributed" mode. In Stage 2, I move to a four node distributed cluster, and in Stage 3, I'm going to launch my own MapReduce job.

The Barbie Cluster

First off, the most important thing in sysadmin is the honor of naming computers. In shock/disapproval/sarcastic honor of Mattel's failed attempt to update their outdated views about women, I decided to name all of my computers after Barbie characters.

As of right now I have four computers set up:

- Barbie

- Ken

- Skipper

- Kelly

And fortunately, mass toy consumerism will allow me to expand my cluster to effectively any size.

The operating system

I originally tried to get the "Barbie Cluster" up and running with Puppy Linux. I chose Puppy initially because most of the machines lacked DVD drives or USB booting, which ruled out more of the "filled out" Linux distributions. Puppy did install pretty easily and I would recommend it for someone who needs a lightweight, functional OS. But eventually, I, like many before me, decided that Puppy was just too much of an undocumented burden to run Hadoop, and I scrapped it for an Ubuntu command line install. (You can't get a lower memory OS than command line, I say.)

To get Ubuntu installed I downloaded the Ubuntu 14.04 Minimal Install CD, which I found here. I burnt the .iso file to a CD, and popped the CD into the CD drives of my hungrily waiting Dells.

After she boots to the CD, select Command Line Install. The setup is pretty straightforward and I didn't need any special choices except naming the computers and users. I partitioned the disk with "Guided, Use Entire Disk and LVM," but I'm not sure if that was necessary. No reason not to do that though since that's what most Linux systems work on.

Before Getting to Hadoop

There's been a suggestion that you should create a dedicated user for Hadoop on your machine. Since this system doesn't have any other user, that seems unnecessary. I didn't bother with that, but I did create a hadoop group and add my doopy user to that group for consistency.

$ sudo addgroup hadoop

$ sudo usermod -g hadoop doopy

I set up an auto-login for myself based on a suggestion I found here. This isn't necessary, but I'm not going to have monitors and it's possible I might want things to run automatically in the background without having to ssh in. To have the machines log on automatically, edit the file '/etc/init/tty1.conf' so that the last line reads

exec /sbin/getty -8 38400 tty1 -a doopy

Next, the most important thing is to get the prerequisite software installed. To do that just type

$ sudo apt-get install openjdk-7-jdk ssh git

This should get your Java and ssh set up. I also included git because everyone eventually needs it anyway.

Finally, you need to set up ssh to allow this machine to ssh itself without a password. (I know, it's the weirdest thing ever, but this is how Hadoop works. I suppose it's probably genius on a level that I don't even understand.)

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ ssh localhost
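One catch with the `cat ... >>` line is that running it twice leaves duplicate entries in authorized_keys. Here's a minimal sketch of a repeat-safe version. The helper name `authorize_key` is made up for illustration and is not part of ssh or Hadoop.

```shell
# Hypothetical helper: append a public key to an authorized_keys file
# only if that exact line is not already present.
authorize_key() {
  pub=$1
  auth=$2
  mkdir -p "$(dirname "$auth")"
  touch "$auth"
  # -q: quiet, -x: match whole lines, -F: treat the key as a fixed string
  if ! grep -qxF -- "$(cat "$pub")" "$auth"; then
    cat "$pub" >> "$auth"
  fi
  # sshd can ignore an authorized_keys file that other users may write to
  chmod 600 "$auth"
}
```

It would be called as `authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`, followed by the same `ssh localhost` test.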

Make it yourself

After apt-getting java and ssh, the only other thing you need is the Hadoop package itself. Unfortunately, the binary distribution that I got from Apache started throwing errors after I installed it. I hunted down the errors and found that the problems arose from a 32-bit vs 64-bit conflict.
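If you want to check for this kind of mismatch yourself, one rough way is to read the class byte from a native library's ELF header and compare it with what the OS reports. This is only a sketch: the helper name `elf_bits` is invented, it assumes the file really is an ELF binary, and the library path in the usage note is my guess at where the native library sits in a Hadoop 2.x tree.

```shell
# Hypothetical helper: report whether an ELF file is 32-bit or 64-bit.
# Byte 5 of an ELF header (offset 4) is the class: 1 = 32-bit, 2 = 64-bit.
# No check is made that the file starts with the ELF magic bytes.
elf_bits() {
  class=$(od -An -tu1 -j4 -N1 "$1" | tr -d '[:space:]')
  case "$class" in
    1) echo 32 ;;
    2) echo 64 ;;
    *) echo unknown ;;
  esac
}
```

Comparing `elf_bits /usr/local/hadoop/lib/native/libhadoop.so.1.0.0` against `getconf LONG_BIT` should show whether the library and the OS agree.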

To solve the problem I recompiled it myself based on the ideas I found here. The Hadoop GitHub had slightly better instructions that nailed down the steps I had to take. I put my own compiled distribution up on the MakeLofT GitHub.

So if you want to skip all the hard work you can get the whole thing set up with this line...

sudo git clone https://github.com/lots-of-things/hadoop-compiled.git /usr/local/hadoop

EDIT: Nevermind, I edited that file so that settings now work for my current production setup.

You also may need to change the ownership of the /usr/local/hadoop directory you just added.

sudo chown -R doopy:hadoop /usr/local/hadoop

Finally, you need to add some settings to your .bashrc file. You can do this by appending the included file called addToBash to the end of your .bashrc file.

cat addToBash >> ~/.bashrc
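For a sense of what ends up in .bashrc, here is a hypothetical stand-in for addToBash. The real file in the repository may set more or different variables; this sketch only assumes the /usr/local/hadoop install location used above.

```shell
# Hypothetical stand-in for addToBash (not the actual file contents).
export HADOOP_HOME=/usr/local/hadoop

# Put the hadoop/hdfs commands and the start/stop scripts on the PATH.
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"

# Derive JAVA_HOME from wherever the java binary really lives, if one is installed.
# With the Ubuntu OpenJDK packages this may resolve to a .../jre directory.
if command -v java >/dev/null 2>&1; then
  java_bin=$(readlink -f "$(command -v java)")
  JAVA_HOME=${java_bin%/bin/java}
  export JAVA_HOME
fi
```

After opening a new shell (or running `source ~/.bashrc`), `echo $HADOOP_HOME` should print the install path.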

Modifying Hadoop's setup

I picked and chose the setup protocol from these three tutorials to tease out exactly what I needed to do. It's so odd that they can all be telling you to do the same thing, and yet still disagree on so much. Importantly, I found that all the information I could find on the YARN setup was pretty much totally off. I had to activate an additional JobHistoryServer that nobody mentioned.

- Michael Noll's tutorial was kind of out of date

- This one seemed to have you doing extra unnecessary things

- Apache's setup instructions were the shortest and least filled out

It was totally unclear to me why each tutorial asked you to modify the files in slightly different ways. Hopefully, I'll come back and edit this to explain all those knobs to other beginners.

For now, my distribution comes preset to work with Hadoop YARN in a pseudo-distributed way. You can look at the other tutorials to see the different things they did, but all I did was edit the following four files to fill out their configuration tag as shown.

etc/hadoop/core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

etc/hadoop/hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

etc/hadoop/mapred-site.xml:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

etc/hadoop/yarn-site.xml:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>
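Since it's easy to put a property in the wrong file, a quick way to see what a given file actually sets can save some head-scratching. The sketch below is a crude text scan, not a real XML parser: the function name `get_prop` is made up, and it assumes each name and value tag sits on its own line, as in the listings above.

```shell
# Hypothetical helper: print the <value> that follows a given <name>
# in a Hadoop *-site.xml file. Usage: get_prop FILE PROPERTY
get_prop() {
  awk -v want="<name>$2</name>" '
    index($0, want) { found = 1; next }
    found && /<value>/ {
      # strip everything up to <value> and from </value> onward
      gsub(/.*<value>|<\/value>.*/, "")
      print
      exit
    }
  ' "$1"
}
```

For example, `get_prop etc/hadoop/core-site.xml fs.defaultFS` should print the HDFS address. Hadoop can also report the value it resolves itself with `bin/hdfs getconf -confKey fs.defaultFS`.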

You have to make the next two files if you want to run YARN.

sbin/start-jobhist.sh:

bin=`dirname "${BASH_SOURCE-$0}"`

"$bin"/mr-jobhistory-daemon.sh start historyserver

sbin/stop-jobhist.sh:

bin=`dirname "${BASH_SOURCE-$0}"`

"$bin"/mr-jobhistory-daemon.sh stop historyserver

Running the example

To test that this was working I simply ran the example task that all three of those tutorials suggested. You can find it on any of those sites but here I've reproduced it for you.

The following instructions run a MapReduce job on the single-node, pseudo-distributed setup, with YARN and the JobHistoryServer started alongside HDFS.

1) Format the filesystem:

$ bin/hdfs namenode -format

2) Start NameNode daemon and DataNode daemon:

$ sbin/start-dfs.sh

3) Start YARN daemon:

$ sbin/start-yarn.sh

EXTRA) Start JobHistoryServer:

$ sbin/start-jobhist.sh

4) Make the HDFS directories required to execute MapReduce jobs:

$ bin/hdfs dfs -mkdir /user

$ bin/hdfs dfs -mkdir /user/<username>

5) Copy the input files into the distributed filesystem:

$ bin/hdfs dfs -put etc/hadoop input

6) Run some of the examples provided:

$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar grep input output 'dfs[a-z.]+'

7) View the output files on the distributed filesystem:

$ bin/hdfs dfs -cat output/*

EXTRA) Stop JobHistoryServer:

$ sbin/stop-jobhist.sh

8) Stop YARN:

$ sbin/stop-yarn.sh

9) Stop dfs:

$ sbin/stop-dfs.sh
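Once the filesystem has been formatted, the remaining steps can be strung together in a small wrapper. This is a sketch under a few assumptions: the function names `run` and `run_grep_example` and the `DRY_RUN` switch are my own, the install lives at /usr/local/hadoop unless HADOOP_HOME says otherwise, and the format step is left out on purpose because re-running it wipes HDFS.

```shell
# Hypothetical wrapper around the numbered steps above.
# Set DRY_RUN=1 to print the commands without running them.
run() {
  printf '+ %s\n' "$*"
  if [ -z "${DRY_RUN:-}" ]; then
    "$@"
  fi
}

run_grep_example() {
  h=${HADOOP_HOME:-/usr/local/hadoop}
  user=${USER:-$(id -un)}
  # Each step only runs if the one before it succeeded.
  run "$h/sbin/start-dfs.sh" &&
  run "$h/sbin/start-yarn.sh" &&
  run "$h/sbin/start-jobhist.sh" &&
  run "$h/bin/hdfs" dfs -mkdir -p "/user/$user" &&
  run "$h/bin/hdfs" dfs -put "$h/etc/hadoop" input &&
  run "$h/bin/hadoop" jar "$h/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar" \
      grep input output 'dfs[a-z.]+' &&
  run "$h/bin/hdfs" dfs -cat 'output/*'
  status=$?
  # Shut everything down whether or not the job worked.
  run "$h/sbin/stop-jobhist.sh"
  run "$h/sbin/stop-yarn.sh"
  run "$h/sbin/stop-dfs.sh"
  return "$status"
}
```

Running `DRY_RUN=1 run_grep_example` first shows exactly what would be executed. On a second real run the `-put` and the job itself will likely complain that input and output already exist in HDFS, so those directories would need removing first.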

I was able to monitor the jobs from my laptop over my network by going to http://10.0.0.1:8088. Of course, I replaced 10.0.0.1 with the local IP address for that machine.
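If the web page doesn't load, it may be worth checking which daemons are actually up. The JDK's `jps` tool lists running Java processes, and a sketch like the one below can flag anything missing. The function name `missing_daemons` is invented, and the list of expected names assumes the pseudo-distributed setup from this post with the JobHistoryServer started.

```shell
# Hypothetical check: read `jps` output on stdin and print every expected
# Hadoop daemon that is not listed. Returns non-zero if any are missing.
missing_daemons() {
  listing=$(cat)
  missing=0
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager JobHistoryServer; do
    # jps prints "<pid> <name>"; compare the second field exactly
    if ! printf '%s\n' "$listing" | awk -v d="$d" '$2 == d { ok = 1 } END { exit !ok }'; then
      echo "$d"
      missing=1
    fi
  done
  return "$missing"
}
```

On the node itself this would be run as `jps | missing_daemons`. No output means all six daemons were found.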

Troubleshooting

Something in this tutorial is going to become outdated in the next six months. My best advice is simply to keep searching, my friend. Someone else has debugged it before you.

And now, after just a week, I have four individual machines running Hadoop by themselves. Next week, I'm hoping to get them talking to each other and running another example problem across a distributed file system... Well, I can dream can't I?

will stedden
